Third, you need to ‘own the code’ even if the AI produced it. Don’t think that because AI produced the code it’s not yours. The AI tool doesn’t care (or know) about the intention of the code it is producing on your behalf. Even though you didn’t write it, the code is yours in the sense that it is doing (or is trying to do) what you want it to do. As a human it seems intuitive that the AI should understand the code given that it produced it, but it really doesn’t. I found that only by accepting that the code was mine, including any errors in it, could I properly interrogate it to check for errors. But also, if I’m going to use the code for any practical purpose, then it’s my name against the outcomes, so the sooner I take ownership the better.

Standardised and transparent model descriptions for agent-based models

Last month saw the initial publication (although officially it is a May publication!) of the paper that came out of the agent-based modelling workshop I participated in at iEMSs 2012.

Birgit Müller put in some great work to summarise our discussion and bring together the paper, which addresses how we describe agent-based models. The paper, ‘Standardised and transparent model descriptions for agent-based models: Current status and prospects’, has several highlights:

- We describe how agent-based models can be documented with different types of model descriptions.

- We differentiate eight purposes for which model descriptions are used.

- We evaluate the different description types on their utility for the different purposes.

- We conclude that no single description type alone can fulfil all purposes simultaneously.

- We suggest a minimum standard by combining particular description types.

To present our assessment of how well alternative description types meet different purposes, we produced the figure below. In the figure, light grey indicates limited ability, medium grey indicates medium ability and dark grey indicates high ability (an x indicates not applicable). Full details of this assessment are given in a version of the diagram in the paper’s online supporting appendix.

The citation and abstract for the paper are below. If you have any questions, or would like a reprint, get in touch.

Müller, B., Balbi, S., Buchmann, C.M., de Sousa, L., Dressler, G., Groeneveld, J., Klassert, C.J., Le, Q.B., Millington, J.D.A., Nolzen, H., Parker, D.C., Polhill, J.G., Schlüter, M., Schulze, J., Schwarz, N., Sun, Z., Taillandier, P. and Weise, H. (2014). Standardised and transparent model descriptions for agent-based models: Current status and prospects. Environmental Modelling & Software, 55, 156-163.

DOI: 10.1016/j.envsoft.2014.01.029

Abstract

Agent-based models are helpful to investigate complex dynamics in coupled human–natural systems. However, model assessment, model comparison and replication are hampered to a large extent by a lack of transparency and comprehensibility in model descriptions. In this article we address the question of whether an ideal standard for describing models exists. We first suggest a classification for structuring types of model descriptions. Secondly, we differentiate purposes for which model descriptions are important. Thirdly, we review the types of model descriptions and evaluate each on their utility for the purposes. Our evaluation finds that the choice of the appropriate model description type is purpose-dependent and that no single description type alone can fulfil all requirements simultaneously. However, we suggest a minimum standard of model description for good modelling practice, namely the provision of source code and an accessible natural language description, and argue for the development of a common standard.

Keywords

Agent-based modelling; Domain specific languages; Graphical representations; Model communication; Model comparison; Model development; Model design; Model replication; Standardised protocols

Aspiration, Attainment and Success accepted

Back in February last year I wrote a blog post describing my initial work using agent-based modelling to examine spatial patterns of school choice in some of London’s education authorities. Right at the start of this month I presented a summary of the development of that work at the IGU 2013 Conference on Applied GIS and Spatial Modelling (see the slideshare presentation below). And then this week I had a full paper with all the detailed analysis accepted by JASSS – the Journal of Artificial Societies and Social Simulation. Good news!

One of the interesting things we show with the model, which was not readily apparent at the outset of our investigation, is that parent agents with above-average but not very high spatial mobility fail to get their child into their preferred school more frequently than other parents – including those with lower mobility. This is partly due to the differing aspirations of parents to move house to ensure they live in appropriate neighbourhoods, given that distance (from home to school) is used to ration places at popular schools. In future, when better informed by individual-level data and used in combination with scenarios of different education policies, our modelling approach will allow us to investigate more rigorously the consequences of education policy for inequalities in access to education.
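
To make the rationing mechanism a little more concrete, here is a minimal Python sketch of distance-based allocation. It assumes each school simply fills its places with the applicants who live closest to it; the `Parent` class, the `allocate_by_distance` function and the capacities are hypothetical illustrations, not code from the model described in the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Parent:
    pid: int
    x: float
    y: float
    preferred: str  # id of the school this parent most wants (hypothetical field)


def allocate_by_distance(parents, schools, capacity):
    """Give each school's places to its nearest applicants (hypothetical rule).

    schools: dict of school id -> (x, y) location
    capacity: dict of school id -> number of places
    Returns a dict of parent id -> allocated school id; parents who miss out
    on their preferred school are simply absent from the result.
    """
    allocated = {}
    for sid, (sx, sy) in schools.items():
        applicants = [p for p in parents if p.preferred == sid]
        # Rank applicants by straight-line distance from home to school
        applicants.sort(key=lambda p: math.hypot(p.x - sx, p.y - sy))
        for p in applicants[: capacity[sid]]:
            allocated[p.pid] = sid
    return allocated


schools = {"A": (0.0, 0.0)}
parents = [Parent(1, 1.0, 0.0, "A"), Parent(2, 5.0, 0.0, "A"), Parent(3, 2.0, 0.0, "A")]
print(allocate_by_distance(parents, schools, {"A": 2}))  # {1: 'A', 3: 'A'}
```

Under a rule like this, whether a parent succeeds depends on where they live relative to other applicants, which is why moving house can matter so much.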

I’ve pasted the abstract below and because JASSS is freely available online you’ll be able to read the entire paper in a few months when it’s officially published. Any questions before then, just zap me an email.

Millington, J.D.A., Butler, T. and Hamnett, C. (forthcoming) Aspiration, Attainment and Success: An agent-based model of distance-based school allocation. Journal of Artificial Societies and Social Simulation.

Abstract

In recent years, UK governments have implemented policies that emphasise the ability of parents to choose which school they wish their child to attend. Inherently spatial school-place allocation rules in many areas have produced a geography of inequality between parents that succeed and fail to get their child into preferred schools based upon where they live. We present an agent-based simulation model developed to investigate the implications of distance-based school-place allocation policies. We show how a simple, abstract model can generate patterns of school popularity, performance and spatial distribution of pupils which are similar to those observed in local education authorities in London, UK. The model represents ‘school’ and ‘parent’ agents. Parental ‘aspiration’ to send their child to the best performing school (as opposed to other criteria) is a primary parent agent attribute in the model. This aspiration attribute is used as a means to constrain the location and movement of parent agents within the modelled environment. Results indicate that these location and movement constraints are needed to generate empirical patterns, and that patterns are generated most closely and consistently when school agents differ in their ability to increase pupil attainment. Analysis of model output for simulations using these mechanisms shows how parent agents with above-average – but not very high – aspiration fail to get their child a place at their preferred school more frequently than other parent agents. We highlight the kinds of alternative school-place allocation rules and education system policies the model can be used to investigate.

Agent-based in Auckland

Today is my first day back in the UK after my trip to the AAG and University of Auckland. The end of May is perilously close so if I’m to keep my New Year’s resolution of one blog per month I’d better crack on with this now (as this week my time will be taken by attending and preparing for a workshop on Agency in Complex Information Systems at Imperial College and the International Geographical Union meeting in Leeds).

The main aim of visiting Assoc. Profs. David O’Sullivan and George Perry was to continue from where we left off with our recent work on agent-based modelling (including that published in Geoforum on narrative explanation and in the Agent-Based Models of Geographical Systems book chapter). The paper on narrative explanation was actually initiated during a previous trip I made to Auckland in 2005; it takes a while for these things to come to fruition (but in my defence I was busy with other things for several years and there were other outcomes from that trip). Hopefully, a concrete outcome such as a publication from our modelling and discussions won’t be so long in coming this time around! In particular, we’ll continue to examine the idea that, just as we fail to maximise the value of spatial models by not using spatial analysis of their output, we fail to maximise the value of agent-based models by not using agent-based analysis of their output. Identifying ways to understand how agent interactions and attributes influence path dependency in system dynamics seems an interesting place to start…

While in Auckland I also made good progress on the manuscript I’m writing with John Wainwright on the value of agent-based modelling for integrating geographical understanding (which I mentioned previously). I presented the main ideas from this manuscript in a seminar to members of the School of Environment and got some useful feedback. The slides from my presentation are below and I’m sure I’ll discuss that more here in future.

Another area I made progress on with George is the continuing use of the Mediterranean disturbance-succession modelling platform developed during my PhD. We think there are some interesting questions we can use an enhanced version of the original model to investigate, including examining the controls on Mediterranean vegetation competition and succession during the Holocene. Soil moisture was one of the factors the original model was most sensitive to, because of its importance for succession dynamics, so I’ve started updating the model to use the soil-water balance model employed in the LandClim model. Enhancing the model in this way will also improve its applicability to explore fire-vegetation interactions with human activity and to explore questions regarding fire-vegetation-terrain interactions (i.e., ecogeomorphology).

So, lots to be going on with, and hopefully I’ll be able to visit again in another few years’ time.

AAG 2013

Last week I was in Los Angeles for my first ever Association of American Geographers Annual Meeting. I think I hadn’t been before because the US-IALE annual meeting is around the same time of year and attending that has made more sense in the last few years given my work on forest modelling in Michigan. As I’d heard previously, the meeting was huge – although not quite as crazy as it could have been.

Most of my participation at the meeting was related to the Land Systems Science Symposium sessions (which ran across four days) and the Agent-Based and Cellular Automata Models for Geographical Systems sessions. It was good to discuss and meet new people wrestling with similar issues to those in my own research. Unfortunately, the ABM sessions were scheduled for the last day, which meant it was only late in the conference that I got to properly meet people I’d encountered online (e.g., Mike Batty, Andrew Crooks, Nick Magliocca) and others. Despite being scheduled for the last day there was a good turnout in the sessions and my presentation (below) seemed to go down well. Researchers from the group at George Mason University were the best represented, with much of their work using the MASON modelling libraries (which I’m going to have to look into more to continue the work initiated during my PhD).

It’s hard to concentrate on 20-minute paper sessions continuously for five days though, and I found the discussion panels and plenaries a nice relief, allowing a broader picture to develop. For example, David O’Sullivan (whom I’m currently visiting at the University of Auckland) chaired an interesting panel discussion on ABM for Land Use/Cover Change. Participants included Chris Bone, who discussed the need for better representation of model uncertainty from multiple simulations (via temporal variant-invariant analysis, coming soon in IJGIS); Dan Brown, who suggested we’re missing mid-level models that are neither abstract ‘toys’ nor beholden to mimetic reproduction of specific empirical data (e.g., where are the ABM equivalents of von Thünen and Burgess type models?); and Moira Zellner, who highlighted problems of using ABM for decision-making in participatory approaches (Moira’s presentation in the ABM session was great, discussing the ‘blow-up’ in her participatory modelling project when the model got too complicated and stakeholders no longer wanted to know what the model was doing under the hood).

I also really enjoyed Mike Goodchild’s Progress in Human Geography annual lecture, in which he reviewed the development of GIScience through his long career and where he thought it should go next (‘Old Debates, New Opportunities’). Goodchild argued (I think) that Geography cannot (and should not) be an experimental science in the mold of Physics, and that rather than attempting to identify laws in social (geographical) science, we should aim to find things that can be deemed to be ‘generally true’ and used as a norm for reducing uncertainty. This is possible because geography is ‘neither uniform nor unique’, but it is repeating. Furthermore, he argued it was time for GIScience to rediscover place and that a technology of place is needed to accompany the (existing) technology of space. This technology of place might use names rather than co-ordinates, hierarchies of places rather than layers of coverages, and produce sketch maps rather than planimetric maps. The substitution of names of places for co-ordinates of locations is particularly important here, as names are social constructs and so multiple (local) maps are possible (and needed) rather than a single (global) map. Goodchild exemplified this using Google Maps, which differs depending on which country you view it from (e.g., depending on what the State views as its legitimate borders). He talked about loads of other stuff, including critical GIS, but these were the points I found most intriguing.

Another way to break up the constant stream of 20-minute project summaries would have been organised fieldtrips around the LA area. However, unlike the landscape ecology conference, there is no single time set aside for fieldtrips, and while there are organised trips they’re scheduled throughout the week (simultaneous with sessions). Given such a large conference I guess it would be hard to fit all the sessions into a single week if time were set aside. I didn’t make it to any of the formal fieldtrips, but with Ben Clifford (check out his new book, The Collaborating Planner?) and Kerry Holden I did manage to find time to hit the beach for some sun. It was a long winter in the UK after all! Now I’m in Auckland it’s warm but stormy; an update about activities here to come in May.

Recursion in society and simulation

This week I visited one of my former PhD advisors, Prof John Wainwright, at Durham University. We’ve been working on a manuscript together for a while now and as it’s stalled recently we thought it time we met up to re-inject some energy into it. The manuscript is a discussion piece about how agent-based modelling (ABM) can contribute to understanding and explanation in geography. We started talking about the idea in Pittsburgh in 2011 at a conference on the Epistemology of Modeling and Simulation. I searched through this blog to see where I’d mentioned the conference and manuscript before, but to my surprise, before this post I hadn’t.

In our discussion of what we can learn through using ABM, John highlighted the work of Kurt Gödel and his incompleteness theorems. Not knowing all that much about that stuff, I’ve been ploughing my way through Douglas Hofstadter’s tome ‘Gödel, Escher, Bach: An Eternal Golden Braid’ – heavy going in places but very interesting. In particular, his discussion of the concept of recursion has caught my attention, as it’s something I’ve been identifying elsewhere.

The general concept of recursion involves nesting, like Russian dolls: stories within stories (as in Don Quixote) and images within images.

Computer programmers often take advantage of recursion in their code, calling a given procedure from within that same procedure (hence their love of recursive acronyms like PHP [PHP: Hypertext Preprocessor]). An example of how this works is Saura and Martinez-Millan’s modified random clusters method for generating land cover patterns with given properties. I used this method in the simulation model I developed during my PhD and have re-coded the original algorithm for use in NetLogo (available online). In that code, the grow-cover_cluster procedure is called from within itself, allowing clusters of pixels to ‘grow themselves’.
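
The NetLogo code itself is not reproduced here. As a rough, hypothetical illustration of the same idea (not a translation of the modified random clusters algorithm), the Python sketch below grows a cluster by having a procedure call itself on neighbouring cells. The function name, the grid representation and the spread probability `p_spread` are all assumptions made for the example.

```python
import random


def grow_cover_cluster(grid, row, col, cover, p_spread, rng):
    """Assign `cover` to (row, col), then try to spread to empty neighbours.

    grid: list of lists in which None marks a pixel with no cover yet.
    p_spread: hypothetical probability of spreading to each empty neighbour.
    """
    grid[row][col] = cover
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + dr, col + dc
        in_bounds = 0 <= r < len(grid) and 0 <= c < len(grid[0])
        if in_bounds and grid[r][c] is None and rng.random() < p_spread:
            # Recursive call: the cluster 'grows itself' from each new pixel
            grow_cover_cluster(grid, r, c, cover, p_spread, rng)


rng = random.Random(42)
grid = [[None] * 6 for _ in range(6)]
grow_cover_cluster(grid, 2, 2, "forest", 0.5, rng)
for line in grid:
    print("".join("F" if cell == "forest" else "." for cell in line))
```

Because each call can trigger further calls, the size and shape of the cluster emerge from `p_spread` rather than being set directly. On large grids an explicit stack would avoid Python’s default recursion limit.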

However, rather than get into the details of the use of recursion in programming, I want to highlight two other ways in which recursion is important in social activity and its simulation.

The first is how society (and social phenomena) has a recursive relationship with the people (and their activities) composing it. For example, Anthony Giddens’ theory of structuration argues that the social structures (i.e., rules and resources) that constrain or prompt individuals’ actions are also ultimately the result of those actions. Hence, there is a duality of structure, which is:

“the essential recursiveness of social life, as constituted in social practices: structure is both medium and outcome of reproduction of practices. Structure enters simultaneously into the constitution of the agent and social practices, and ‘exists’ in the generating moments of this constitution”. (p.5 Giddens 1979)

Another example comes from Andrew Sayer in his latest book ‘Why Things Matter to People’, which I’m also working through currently. One of Sayer’s arguments is that we humans are “evaluative beings: we don’t just think and interact but evaluate things”. For Sayer, these day-to-day evaluations have a recursive relationship with the broader values that individuals hold, values being ‘sedimented’ valuations, “based on repeated particular experiences and valuations of actions, but [which also tend], recursively, to shape subsequent particular valuations of people and their actions”. (p.26 Sayer 2011)

However, while recursion is often used in computer programming and has been suggested as playing a role in different social processes (like those above), its examination in social simulation and ABM has not been so prominent to date. This was a point made by Paul Thagard at the Pittsburgh epistemology conference. Here, it seems, is an opportunity for those seeking to use simulation methods to better understand social patterns and phenomena. For example, in an ABM how do the interactions between individual agents combine to produce structures which in turn influence future interactions between agents?

Second, it seems to me that there are potentially recursive processes surrounding any single simulation model. For if those we simulate should encounter the model in which they are represented (e.g., through participatory evaluation of the model), and if that encounter influences their future actions, do we not then need to account for such interactions between model and modelee (i.e., the person being modelled) in the model itself? This is a point I raised in the chapter I helped John Wainwright and Dr Mark Mulligan re-write for the second edition of their edited book “Environmental Modelling: Finding Simplicity in Complexity”:

“At the outset of this chapter we highlighted the inherent unpredictability of human behaviour and several of the examples we have presented may have done little to persuade you that current models of decision-making can make accurate forecasts about the future. A major reason for this unpredictability is because socio-economic systems are ‘open’ and have a propensity to structural changes in the very relationships that we hope to model. By open, we mean that the systems have flows of mass, energy, information and values into and out of them that may cause changes in political, economic, social and cultural meanings, processes and states. As a result, the behaviour and relationships of components are open to modification by events and phenomena from outside the system of study. This modification can even apply to us as modellers because of what economist George Soros has termed the ‘human uncertainty principle’ (Soros 2003). Soros draws parallels between his principle and the Heisenberg uncertainty principle in quantum mechanics. However, a more appropriate way to think about this problem might be by considering the distinction Ian Hacking makes between the classification of ‘indifferent’ and ‘interactive’ kinds (Hacking, 1999; also see Hoggart et al., 2002). Indifferent kinds – such as trees, rocks, or fish – are not aware that they are being classified by an observer. In contrast humans are ‘interactive kinds’ because they are aware and can respond to how they are being classified (including how modellers classify different kinds of agent behaviour in their models). Whereas indifferent kinds do not modify their behaviour because of their classification, an interactive kind might. This situation has the potential to invalidate a model of interactive kinds before it has even been used. For example, even if a modeller has correctly classified risk-takers vs. risk avoiders initially, a person in the system being modelled may modify their behaviour (e.g., their evaluation of certain risks) on seeing the results of that behaviour in the model. Although the initial structure of the model was appropriate, the model may potentially later lead to its own invalidity!” (p. 304, Millington et al. 2013)

The new edition was just published this week and will continue to be a great resource for teaching at upper levels (I used the first edition in the Systems Modeling and Simulation course I taught at MSU, for example).

More recently, I discussed these ideas about how models interact with their subjects with Peter McBurney, Professor in Informatics here at KCL. Peter has written a great article entitled ‘What are Models For?’, although it’s somewhat hidden away in the proceedings of a conference. In a similar manner to Epstein, Peter lists the various possible uses for simulation models (other than prediction, which is only one of many) and also discusses two uses in more detail: mensatic and epideictic. The former function relates to how models can bring people around a metaphorical table for discussion (e.g., for identifying and potentially deciding about policy trade-offs). The other, epideictic, relates to how ideas and arguments are presented, and leads Peter to argue that representing real-world systems in a simulation model can force people to “engage in structured and rigorous thinking about [their problem] domain”.

John and I will be touching on these ideas about the mensatic and epideictic functions of models in our manuscript. However, beyond this discussion, and of relevance here, Peter discusses meta-models. That is, models of models. The purpose here, and continuing from the passage from my book chapter above, is to produce a model (B) of another model (A) to better understand the relationships between Model A and the real intelligent entities inside the domain that Model A represents:

“As with any model, constructing the meta-model M will allow us to explore “What if?” questions, such as alternative policies regarding the release of information arising from model A to the intelligent entities inside domain X. Indeed, we could even explore the consequences of allowing the entities inside X to have access to our meta-model M.” (p.185, McBurney 2012)

Thus, the models are nested with a hope of better understanding the recursive relationship between models and their subjects. Constructing such meta-models will likely not be trivial, but we’re thinking about it. Hopefully the manuscript John and I are working on will help further these ideas, as does writing blog posts like this.

Selected References

McBurney (2012): What are models for? Pages 175-188, in: M. Cossentino, K. Tuyls and G. Weiss (Editors): Post-Proceedings of the Ninth European Workshop on Multi-Agent Systems (EUMAS 2011). Lecture Notes in Computer Science, volume 7541. Berlin, Germany: Springer.

Millington et al. (2013): Representing human activity in environmental modelling. Pages 291-307, in: J. Wainwright and M. Mulligan (Editors): Environmental Modelling: Finding Simplicity in Complexity (2nd Edition). Wiley.

Forest gap regeneration modelling

Last week the second of two papers describing our forest tree regeneration, growth, and harvest simulation model was published in Ecological Modelling. These two papers initially started out as a single manuscript, but on the recommendation of a reviewer and the editor at Ecological Modelling we split that manuscript into two. That history explains why this second paper to be published focuses on a component of the integrated model we presented a couple of months ago.

There’s a nice overview of the work these two papers contribute to on the MSU Center for System Integration and Sustainability (CSIS) website, and abstracts and citations for both papers are copied at the bottom of this blog post. Here I’ll go into a little bit more detail on the approach to our modelling:

“The model simulates the initial height of the tallest saplings 10 years following gap creation (potentially either advanced regeneration or gap colonizers), and grows them until they are at least 7 m in height when they are passed to FVS for continued simulation. Our approach does not aim to produce a thorough mechanistic model of regeneration dynamics, but rather is one that is sufficiently mechanistically-based to allow us to reliably predict regeneration for trees most likely to recruit to canopy positions from readily-collectable field data.”

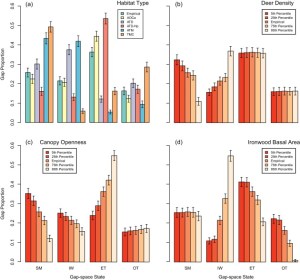

In the model we assume that each forest gap contains space for a given number (n) of 7 m tall trees. For each of these spaces in a gap, we estimate the probability that it is in one of four states 10 years after harvest:

- occupied by a 2m or taller sugar maple tree (SM)

- occupied by a 2m or taller ironwood tree (IW)

- occupied by a 2m or taller tree of another species (OT)

- not occupied by a tree 2m or taller (i.e., empty, ET)

To estimate the probabilities of these states for each of the gap spaces, given different environmental conditions, we use regression modelling for composition data:

“The gap-level probability for each of the four gap-space states (i.e., composition probabilities) is estimated by a regression model for composition data (Aitchison, 1982 and Aitchison, 1986). Our raw composition data are a vector for each of our empirical gaps specifying the proportion of all saplings with height >2 m that were sugar maple, ironwood, or other species (i.e., SM, IW, and OT). If the total number of trees with height >2 m is denoted by t, the proportion of empty spaces (ET) equals zero if t > n, otherwise ET = (n − t)/n. These raw composition data provide information on the ratios of the components (i.e., gap-space states). The use of standard statistical methods with raw composition data can lead to spurious correlation effects, in part due to the absence of an interpretable covariance structure (Aitchison, 1986). However, transforming composition data, for example by taking logarithms of ratios (log-ratios), enables a mapping of the data onto the whole of real space and the use of standard unconstrained multivariate analyses (Aitchison and Egozcue, 2005). We transformed our composition data with a centred log-ratio transform using the ‘aComp’ scale in the ‘compositions’ package (van den Boogaart and Tolosana-Delgado, 2008) in R (R Development Core Team, 2009). These transformed data were then ready for use in a standard multivariate regression model. A centred log-ratio transform is appropriate in our case as our composition data are proportions (not amounts) and the difference between components is relative (not absolute). The ‘aComp’ transformation uses the centred log-ratio scalar product (Aitchison, 2001) and worked examples of the transformation computation can be found in Tolosana-Delgado et al. (2005).”
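
The passage above does the transform with the `compositions` package in R. Purely to show the arithmetic, here is a minimal Python sketch (not the script from the paper) of the ET definition and of a centred log-ratio transform, clr(x)_i = log(x_i / g(x)) where g(x) is the geometric mean of the parts, together with its inverse. The function names and the example composition are hypothetical, and the sketch assumes all parts are strictly positive; zero proportions would need handling before taking logs, which this sketch does not attempt.

```python
import math


def empty_proportion(t, n):
    """ET as defined above: 0 if t > n, otherwise (n - t) / n."""
    return 0.0 if t > n else (n - t) / n


def clr(parts):
    """Centred log-ratio: log of each part minus the mean log (log geometric mean).

    Every part must be > 0; zeros have to be replaced before calling this.
    """
    logs = [math.log(p) for p in parts]
    mean_log = sum(logs) / len(logs)
    return [v - mean_log for v in logs]


def clr_inverse(coords):
    """Back-transform clr coordinates to proportions that sum to 1 (closure)."""
    exps = [math.exp(v) for v in coords]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical gap-space composition in the order SM, IW, OT, ET
composition = [0.4, 0.2, 0.1, 0.3]
z = clr(composition)
print([round(v, 3) for v in z])  # clr coordinates sum to (almost exactly) zero
print([round(p, 3) for p in clr_inverse(z)])
```

Once transformed, the data can be used in a standard multivariate regression, as the passage describes, and predicted values can be mapped back to proportions with `clr_inverse`.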

One of the things I’d like to highlight here is that the R script I wrote to do this modelling is available online as supplementary material to the paper, along with the data we ran it for.

If you look at the R script you can see that, for each gap, the proportions of gap-spaces in the four states predicted by the regression model are interpreted as the probability that a gap-space is in the corresponding state. With these probabilities, we predict the state of each gap space by comparing a random value between 0 and 1 to the cumulative probabilities for each state estimated for the gap. Table 1 in the paper shows an example of this.
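
The sampling step can be sketched in Python as follows (the paper’s own implementation is the R script). Each gap space gets its own uniform random value, which is compared against the running total of the four state probabilities. The function names and the example probabilities are hypothetical.

```python
import random
from itertools import accumulate

STATES = ("SM", "IW", "OT", "ET")


def draw_state(probs, u):
    """Return the first state whose cumulative probability exceeds u (0 <= u < 1)."""
    for state, cum_p in zip(STATES, accumulate(probs)):
        if u < cum_p:
            return state
    return STATES[-1]  # guard in case rounding leaves the total just below 1


def draw_gap_states(probs, n_spaces, rng):
    """Draw a state for each of the n_spaces spaces, each with its own random value."""
    return [draw_state(probs, rng.random()) for _ in range(n_spaces)]


# Hypothetical predicted probabilities for one gap, in the order SM, IW, OT, ET
gap_probs = [0.5, 0.2, 0.1, 0.2]
print(draw_gap_states(gap_probs, 6, random.Random(1)))
```

With these probabilities the cumulative totals are 0.5, 0.7, 0.8 and 1.0, so a random value of 0.6, for example, places that gap space in the ironwood (IW) state.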

With this model set-up we ran the model for scenarios of different soil conditions, deer densities, canopy openness and ironwood basal area (the environmental factors in the model that influence regeneration). The results for these scenarios are shown in the figure below.

Hopefully this gives you an idea about how the model works. The paper has all the details of course, so check that out. If you’d like a copy of the paper(s) or have any questions just get in touch (email or @jamesmillington on Twitter).

Millington, J.D.A., Walters, M.B., Matonis, M.S. and Liu, J. (2013) Filling the gap: A compositional gap regeneration model for managed northern hardwood forests. Ecological Modelling, 253, 17–27.

doi: 10.1016/j.ecolmodel.2012.12.033

Regeneration of trees in canopy gaps created by timber harvest is vital for the sustainability of many managed forests. In northern hardwood forests of the Great Lakes region of North America, regeneration density and composition are highly variable because of multiple drivers that include browsing by herbivores, seed availability, and physical characteristics of forest gaps and stands. The long-term consequences of variability in regeneration for economic productivity and wildlife habitat are uncertain. To better understand and evaluate drivers and long-term consequences of regeneration variability, simulation models that combine statistical models of regeneration with established forest growth and yield models are useful. We present the structure, parameterization, testing and use of a stochastic, regression-based compositional forest gap regeneration model developed with the express purpose of being integrated with the US Forest Service forest growth and yield model ‘Forest Vegetation Simulator’ (FVS) to form an integrated simulation model. The innovative structure of our regeneration model represents only those trees regenerating in gaps with the best chance of subsequently growing into the canopy (i.e., the tallest). Using a multi-model inference (MMI) approach and field data collected from the Upper Peninsula of Michigan we find that ‘habitat type’ (a proxy for soil moisture and nutrients), deer density, canopy openness and basal area of mature ironwood (Ostrya virginiana) in the vicinity of a gap drive regeneration abundance and composition. The best model from our MMI approach indicates that where deer densities are high, ironwood appears to gain a competitive advantage over sugar maple (Acer saccharum) and that habitat type is an important predictor of overall regeneration success. Using sensitivity analyses we show that this regeneration model is sufficiently robust for use with FVS to simulate forest dynamics over long time periods (i.e., 200 years).

Millington, J.D.A., Walters, M.B., Matonis, M.S. and Liu, J. (2013) Modelling for forest management synergies and trade-offs: Northern hardwood tree regeneration, timber and deer. Ecological Modelling, 248, 103–112.

doi: 10.1016/j.ecolmodel.2012.09.019

In many managed forests, tree regeneration density and composition following timber harvest are highly variable. This variability is due to multiple environmental drivers – including browsing by herbivores such as deer, seed availability and physical characteristics of forest gaps and stands – many of which can be influenced by forest management. Identifying management actions that produce regeneration abundance and composition appropriate for the long-term sustainability of multiple forest values (e.g., timber, wildlife) is a difficult task. However, this task can be aided by simulation tools that improve understanding and enable evaluation of synergies and trade-offs between management actions for different resources. We present a forest tree regeneration, growth, and harvest simulation model developed with the express purpose of assisting managers to evaluate the impacts of timber and deer management on tree regeneration and forest dynamics in northern hardwood forests over long time periods under different scenarios. The model couples regeneration and deer density sub-models developed from empirical data with the Ontario variant of the US Forest Service individual-based forest growth model, Forest Vegetation Simulator. Our error analyses show that model output is robust given uncertainty in the sub-models. We investigate scenarios for timber and deer management actions in northern hardwood stands for 200 years. Results indicate that higher levels of mature ironwood (Ostrya virginiana) removal and lower deer densities significantly increase sugar maple (Acer saccharum) regeneration success rates. Furthermore, our results show that although deer densities have an immediate and consistent negative impact on forest regeneration and timber through time, the non-removal of mature ironwood trees has cumulative negative impacts due to feedbacks on competition between ironwood and sugar maple. 
These results demonstrate the utility of the simulation model to managers for examining long-term impacts, synergies and trade-offs of multiple forest management actions.

Spatial Feedbacks (Love it or Hate it?)

I was hoping to make my first blog post of the year about the latest paper to come out of my work in Michigan. The paper is entitled, Filling the gap: A compositional gap regeneration model for managed northern hardwood forests and is forthcoming in Ecological Modelling. Unfortunately, despite being accepted for publication by the editors some time before Christmas, the manuscript seems to have got lost in the production system and has been delayed. If all goes to plan the paper will be out in time for February’s blog post. Instead, today I’ll highlight some other recent activities.

Between Christmas and New Year I took a bit of time to finish off a paper I was invited to submit to a special issue of Ecology and Society. The special issue will be entitled, Exploring Feedbacks in Coupled Human and Natural Systems (CHANS) and will bring together multiple different approaches for accounting for feedbacks in CHANS modelling and applications. The CHANS research framework emphasizes the importance of reciprocal human-nature interactions and the need for holistic study of humans and nature. Feedback loops can be formed in CHANS when information about one system component produces a change in a second component, which in turn provides information which produces a change in the original component.
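The loop described above can be illustrated with a toy, non-spatial sketch. The two components, their initial states and the gains are all hypothetical and are not taken from the paper. Information about component A changes component B, and the changed B in turn changes A. The sign of each causal link determines whether the loop is reinforcing or balancing. A loop with an even number of negative links amplifies change, and an odd number makes it self-correcting.

```python
def loop_polarity(link_signs):
    """Classify a feedback loop from the signs (+1/-1) of its causal links.

    An even number of negative links gives a reinforcing (self-amplifying)
    loop; an odd number gives a balancing (self-correcting) loop.
    """
    product = 1
    for sign in link_signs:
        product *= sign
    return "reinforcing" if product > 0 else "balancing"


def simulate_loop(a0, b0, steps, gain_ab, gain_ba):
    """Toy two-component loop: A changes B, then the updated B changes A.

    a0, b0: hypothetical initial states (deviations from equilibrium).
    gain_ab: hypothetical effect of A on B per step.
    gain_ba: hypothetical effect of B on A per step.
    """
    a, b = a0, b0
    history = [(a, b)]
    for _ in range(steps):
        b = b + gain_ab * a  # information about A produces a change in B
        a = a + gain_ba * b  # the changed B in turn produces a change in A
        history.append((a, b))
    return history


if __name__ == "__main__":
    print(loop_polarity([+1, +1]), simulate_loop(1.0, 0.0, 5, 0.5, 0.5)[-1])
    print(loop_polarity([+1, -1]), simulate_loop(1.0, 0.0, 5, 0.5, -0.5)[-1])
```

With two positive links, both states grow step after step. With one negative link and these particular gains, the states swing back and forth around zero instead of growing without bound. Spatial feedback loops add the question of *where* each component is, which this sketch deliberately leaves out.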

Feedback loops between human and natural components of coupled systems are a primary reason that humans and nature must be investigated together to properly understand their temporal dynamics. However, as a geographer I’m also interested in the role space plays in system dynamics. There don’t seem to have been any broad overviews or analyses of spatial feedbacks in CHANS, so I set out to produce one with the goal of improving understanding of the issue.

After a couple of drafts with very useful comments from the editors of the special issue and colleagues George Perry and David O’Sullivan, I arrived at a manuscript entitled, Three types of spatial feedback loop in coupled human and natural systems. As the title suggests, after identifying some of the key characteristics of feedbacks, I conceptualize and describe three types of spatial feedback loop. These three types address the areal growth of system entities, the importance of transport costs across space, and how spatial patterns can create feedback loops with spatial spread processes.

I won’t go into the details of these now as the manuscript is still under peer review (I think it’s a bit of a Marmite manuscript – they’ll either love it or hate it). However, I will highlight some of the simple spatial simulation models I used to help me conceptualize the feedbacks and which should be useful to help readers do the same (along with the real world examples I used). You can play with the simulation models yourself as they are freely available online. Download the models and their source code for use with NetLogo from http://www.openabm.org/models/eschansfeedback/tag, or use them online without downloading NetLogo from http://modelingcommons.org/tags/one_tag/166. I think these simple spatial simulations should be far more helpful for understanding spatio-temporal dynamics – inherent to spatial feedbacks – than the figures I present in the paper (like that below). See what you think. We’ll find out whether the reviewers love it or hate it in a month or two.

Since the New Year, I’ve spent most of my time working on undergraduate modules I’ll be teaching later this term. In particular, I’m developing a new module named Spatial Data and Mapping for the Principles of Geographical Inquiry course. In the module I’ll introduce students to some of the methods, tools and technologies available to collect and present spatial data. These include GPS and remote sensing (e.g., orthophotos) on the collection side and EDINA Digimap and ArcMap on the presentation side of things. Alongside lectures, there will be plenty of opportunity for students to use these tools as they will collect their own data from London’s Southbank which they will then use to create a digital map. It’s the first time running the module so there may be some teething issues, but hopefully the students will find it interesting and useful for their future studies.

I’m also teaching a PhD-level short course for the KISS-DTC entitled, Social Simulation. The course will provide an introduction to the use of computer simulation methods – notably agent-based modelling – for questions germane to social scientists. I won’t go into detail on that now, maybe in future.

Finally, I’ll just highlight some new urlists I’ve been making as resources for myself and students (and maybe you?). Urlist is a collaboration tool to collect, organize and share lists of links which I’ve found quite handy. I’ve started lists on Open Data (freely available for analysis), Spatial Data and Geodata resources and tools, and Valuation of Ecosystem Services. The Open Data list is collaborative so anyone can contribute relevant links – if you know good Open Data sources online that aren’t listed there please feel free to add!

Wrapping up 2012

We’re nearing the end of 2012, and I’ve written even fewer posts on this blog this year than in 2011. At least I have been tweeting a bit more of late. Here’s a quick round-up of activities and publications since my last post, with a look at some of what’s going on in 2013.

The Geoforum paper on narrative explanation of simulation modelling is now officially published, as is the first of two Ecological Modelling papers on the Michigan forest modelling work. Citations and abstracts for both are below, and are included on my updated publications list. I’ll post more details and info on each in the New Year (promise!). I’ll likely wait to summarise the Michigan paper until the second paper of that couplet is published – hopefully that won’t be too long as it’s now going through the proofs stage.

The proceedings for the iEMSs conference I attended in Leipzig, Germany, this summer are now online. That means that the two papers I presented there are also available. One paper was on the use of social psychology theory for modelling farmer decision-making, and the model I discuss in that paper is available for you to examine. The other paper was a standpoint contribution to a workshop on the place of narrative for explaining decision-making in agent-based models. From that workshop we’re working on a paper to be published in Environmental Modelling and Software about model description methods for agent-based models. More on that next year too hopefully.

In one of my earlier posts this year I talked about agent-based modelling spatial patterns of school choice (I’ll get the images for that post online again soon… maybe). I’ve managed to write up the early stages of that work and have submitted it to JASSS. We’ll see how that goes down. I hope to continue on that work in the new year also, possibly while in New Zealand at the University of Auckland. I’ll be in Auckland visiting and working with George Perry and David O’Sullivan, with whom I published the recent Geoforum paper (highlighted above). On the way to New Zealand I’ll be stopping off in Los Angeles for the Association of American Geographers conference which I haven’t been to previously and which should be interesting.

So that’s it for 2012. A New Year’s resolution for 2013 – post at least once every month on this blog! Especially from Down Under.

Happy Holidays!

Abstracts

Millington, J.D.A., O’Sullivan, D., Perry, G.L.W. (2012) Model histories: Narrative explanation in generative simulation modelling Geoforum 43 1025–1034

The increasing use of computer simulation modelling brings with it epistemological questions about the possibilities and limits of its use for understanding spatio-temporal dynamics of social and environmental systems. These questions include how we learn from simulation models and how we most appropriately explain what we have learnt. Generative simulation modelling provides a framework to investigate how the interactions of individual heterogeneous entities across space and through time produce system-level patterns. This modelling approach includes individual- and agent-based models and is increasingly being applied to study environmental and social systems, and their interactions with one another. Much of the formally presented analysis and interpretation of this type of simulation resorts to statistical summaries of aggregated, system-level patterns. Here, we argue that generative simulation modelling can be recognised as being ‘event-driven’, retaining a history in the patterns produced via simulated events and interactions. Consequently, we explore how a narrative approach might use this simulated history to better explain how patterns are produced as a result of model structure, and we provide an example of this approach using variations of a simulation model of breeding synchrony in bird colonies. This example illustrates not only why observed patterns are produced in this particular case, but also how generative simulation models function more generally. Aggregated summaries of emergent system-level patterns will remain an important component of modellers’ toolkits, but narratives can act as an intermediary between formal descriptions of model structure and these summaries. Using a narrative approach should help generative simulation modellers to better communicate the process by which they learn so that their activities and results can be more widely interpreted. 
In turn, this will allow non-modellers to foster a fuller appreciation of the function and benefits of generative simulation modelling.

Millington, J.D.A., Walters, M.B., Matonis, M.S. and Liu, J. (2013) Modelling for forest management synergies and trade-offs: Northern hardwood tree regeneration, timber and deer Ecological Modelling 248 103–112

In many managed forests, tree regeneration density and composition following timber harvest are highly variable. This variability is due to multiple environmental drivers – including browsing by herbivores such as deer, seed availability and physical characteristics of forest gaps and stands – many of which can be influenced by forest management. Identifying management actions that produce regeneration abundance and composition appropriate for the long-term sustainability of multiple forest values (e.g., timber, wildlife) is a difficult task. However, this task can be aided by simulation tools that improve understanding and enable evaluation of synergies and trade-offs between management actions for different resources. We present a forest tree regeneration, growth, and harvest simulation model developed with the express purpose of assisting managers to evaluate the impacts of timber and deer management on tree regeneration and forest dynamics in northern hardwood forests over long time periods under different scenarios. The model couples regeneration and deer density sub-models developed from empirical data with the Ontario variant of the US Forest Service individual-based forest growth model, Forest Vegetation Simulator. Our error analyses show that model output is robust given uncertainty in the sub-models. We investigate scenarios for timber and deer management actions in northern hardwood stands for 200 years. Results indicate that higher levels of mature ironwood (Ostrya virginiana) removal and lower deer densities significantly increase sugar maple (Acer saccharum) regeneration success rates. Furthermore, our results show that although deer densities have an immediate and consistent negative impact on forest regeneration and timber through time, the non-removal of mature ironwood trees has cumulative negative impacts due to feedbacks on competition between ironwood and sugar maple. 
These results demonstrate the utility of the simulation model to managers for examining long-term impacts, synergies and trade-offs of multiple forest management actions.

Social simulation: what criticism do we get?

This week on the SIMSOC listserv there was a request from Annie Waldherr & Nanda Wijermans for modellers of social systems to complete a short questionnaire on the sort of criticism they receive. The questionnaire consists of only two short questions: one asking what field you are in and the other asking you to ‘Describe the criticism you receive. For instance, recall the questions or objections you got during a talk you gave. Feel free to address several points.’

Here was my quick response to the second question:

1) Too many ‘parameters’ in agent-based models (ABM) make them difficult to analyse rigorously and make it hard to fully appreciate their uncertainty (although I think this kind of statement highlights the misunderstanding some have of how ABM can be structured – often models of this type rely more on rules of interaction between agents than on individual parameters).

2) The results of models are seen as being driven more by the assumptions of the modeller than by the state of the real world. That is, modellers may learn a lot about their models but not much about the real world (see the similar point made by Grimm [1999] in Ecological Modelling 115).

I think it would have been nice to have a third question offering an opportunity to suggest how we can, or should, respond to these criticisms. Here’s what I would have written if that third question had been there:

To address point 1) above we need to make sure that we:

i) document our models comprehensively (e.g., via ODD) so that others understand model structure and can identify likely important parameters/rules and assumptions;

ii) show that the model parameter space has been widely explored (e.g., via techniques like Latin hypercube sampling).
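Point ii) mentions Latin hypercube sampling. The following is a minimal, standard-library sketch of the idea, not the procedure used in any particular study. Each parameter's range is split into n equal strata, and one value is drawn from each stratum. The strata are then paired at random across parameters, so that n model runs cover every part of each parameter's range. The parameter ranges in the example are hypothetical. In practice, a library routine such as SciPy's `scipy.stats.qmc.LatinHypercube` would usually be used instead.

```python
import random


def latin_hypercube(n_samples, bounds, seed=None):
    """Draw a Latin hypercube sample of parameter sets.

    bounds: list of (low, high) tuples, one per (hypothetical) model parameter.
    Returns a list of n_samples tuples, each one parameter set for a model run.
    """
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        width = (high - low) / n_samples
        # One uniform draw inside each of the n_samples equal-width strata,
        # so every part of this parameter's range is covered exactly once.
        values = [low + (i + rng.random()) * width for i in range(n_samples)]
        # Shuffle independently per parameter so strata are paired at random.
        rng.shuffle(values)
        columns.append(values)
    # Transpose columns into rows: one tuple of parameter values per run.
    return [tuple(col[j] for col in columns) for j in range(n_samples)]


if __name__ == "__main__":
    # Two hypothetical ABM parameters (e.g. an interaction radius and a
    # probability); the ranges are illustrative only.
    for params in latin_hypercube(5, [(1.0, 10.0), (0.0, 1.0)], seed=42):
        print(params)
```

Compared with a full factorial sweep, this keeps the number of runs fixed at n however many parameters there are. That is why LHS is attractive for ABMs with many parameters. It still says nothing about the rules of interaction, which need documenting separately as in i).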

To address 2) we need to make sure that:

iii) when documenting our models (see i) we fully justify the rationale of our models, hopefully with reference to real world data;

iv) we acknowledge and emphasise that the current state of ABM means that usually they can be no more than metaphors or sophisticated analogies for the real world but that they are useful for providing alternative means to think about social phenomena (i.e., they have heuristic properties).

If you’re working in this area, go and share your thoughts by completing the short questionnaire, or by leaving comments below.